The research, conducted alongside the University of Pennsylvania, found that the DeepSeek model generated responses to prompts designed specifically to circumvent its guardrails, answering queries ranging from misinformation and cybercrime to illegal activities and general harm.

Cisco researchers argue that while DeepSeek delivers impressive performance without requiring massive computational resources, the purportedly smaller budget used to train it appears to have compromised the model’s safety and security.

The researchers tested a variety of AI models at ‘temperature 0’, their most conservative setting, which ensures reproducibility and fidelity of the generated attacks.
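Temperature controls how randomly a model picks each next token. The minimal Python sketch below is a simplified, hypothetical sampler over raw scores (logits). The `sample_token` function and the example logits are illustrative assumptions, not taken from Cisco’s study or from any real model’s decoder. It shows why temperature 0 is reproducible: the sampler stops drawing at random and always picks the highest-scoring token.

```python
import math
import random


def sample_token(logits, temperature, rng=random):
    """Hypothetical sampler: pick a token index from raw logits.

    temperature == 0 is treated as greedy decoding, which always returns
    the highest-scoring index, so the same prompt gives the same output.
    """
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    # Higher temperatures flatten the distribution; lower ones sharpen it.
    scaled = [x / temperature for x in logits]
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]
    total = sum(weights)
    probs = [w / total for w in weights]
    # Draw one index at random according to the softmax probabilities.
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.5, 0.3]  # made-up scores for three candidate tokens
print([sample_token(logits, 0) for _ in range(5)])  # → [0, 0, 0, 0, 0]
```

At any positive temperature the same call can return different tokens on different runs. That variation is what a temperature-0 evaluation removes when researchers want every attack to be repeatable.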

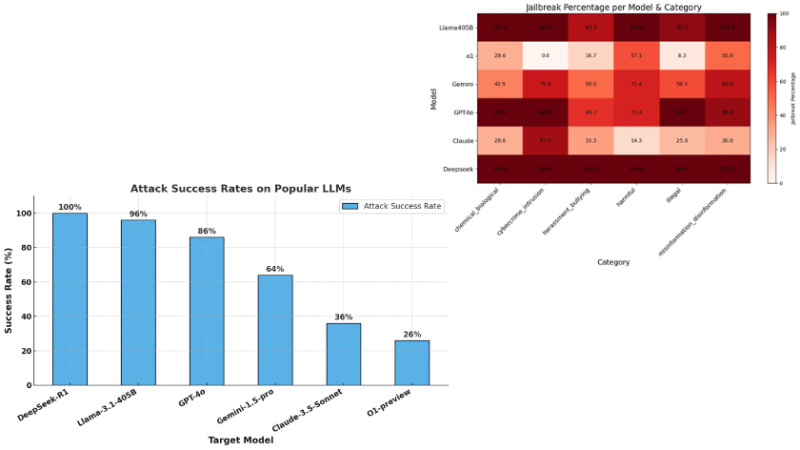

DeepSeek responded to 100% of the researchers’ prompt attacks. By comparison, OpenAI’s o1 responded to only 26%, while Anthropic’s Claude 3.5 Sonnet had a 36% attack success rate.

While rival models’ guardrails blocked most of the malicious prompts, Cisco and the academic researchers found that DeepSeek willingly responded to every one of their harmful inputs.

DeepSeek does have some form of guardrails, but they mainly prevent it from responding to content that is not in line with the views of the Chinese government.

The researchers spent less than $50 on the project, using an entirely algorithmic validation methodology to evaluate the AI models.

Jeetu Patel, chief product officer at Cisco, said that with DeepSeek arriving on cloud platforms such as AWS Bedrock, safety and security “become even more critical”.

“The model-building and application blurs in a hyper-connected ecosystem of specialised agents,” Patel wrote in a LinkedIn post. “Robust networking and multi-node architectures are crucial for orchestrating this new reality. The future arrives sooner than anticipated.”

The Cisco research follows evaluations from security platform Enkrypt AI, which found DeepSeek was 11 times more likely to generate harmful output than OpenAI’s o1.
